Researchers break robot 'emotional barrier'

Wuhan engineers develop system that identifies, mimics human expressions

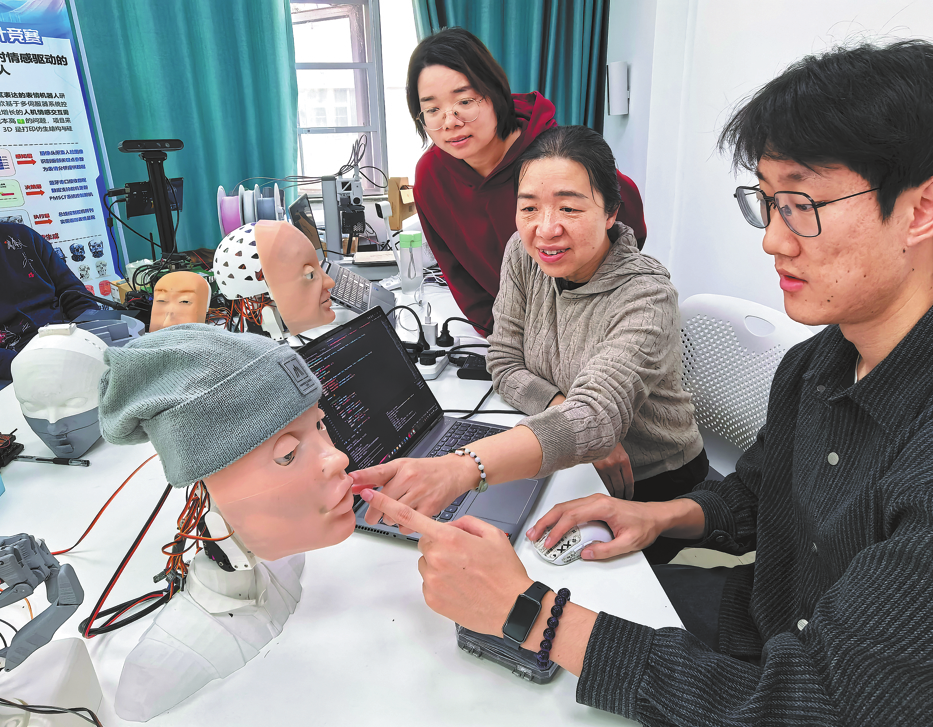

Engineers at the Huazhong University of Science and Technology in Wuhan, Hubei province, have developed a breakthrough robotic system capable of identifying and mimicking complex human emotions, a significant leap toward bridging the communication gap between humans and machines.

The research, led by professor Yu Li, uses high-precision algorithms to decode the "visual language" of the human face. By identifying distinct facial "action units" (the subtle muscle movements of the eyes, nose and mouth), the system can recognize seven basic emotions with an average accuracy of 95 percent in real-world scenarios. The seven categories are anger, disgust, fear, happiness, sadness, surprise and neutral.

While existing artificial intelligence often struggles with nuance, the HUST team's algorithm can identify 15 "compound" expressions — emotions made of two or more feelings, such as "happily surprised" or "fearfully disgusted". The system boasts a 70 percent precision rate in these complex categories, a figure the team describes as "rare" in the current field.

"The human face is divided into dozens of action units, each corresponding to the muscle movements of specific areas such as the eyes, nose, mouth and eyebrows," Yu said. "For example, happiness is typically represented by raised cheeks, upturned corners of the mouth, and an open mouth, while anger involves furrowed brows, tightened eyelids and a tense mouth."

By capturing these detailed movements, the algorithm lets robots determine the corresponding emotional category while filtering out the influence of an individual's inherent characteristics.

Previous technologies have struggled to accurately decompose the primary and secondary emotions in complex expressions, and they also tend to miss subtle, fleeting movements such as micro-expressions.

Yu's team tackled the challenge by establishing a logic to identify the dominant emotion first, better capturing subtle movements, and optimizing the model through training on massive datasets.
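The "dominant emotion first" logic can be illustrated with a minimal Python sketch. It is not the team's algorithm: the action-unit names are borrowed from Yu's own descriptions of happiness, anger and disgust, while the scoring rule, the `PROTOTYPES` table and the `secondary_threshold` value are assumptions made purely for illustration.

```python
# Hypothetical action-unit names based on the article's descriptions;
# the prototypes and the threshold are illustrative, not the team's values.
PROTOTYPES = {
    "happiness": {"raised_cheeks", "upturned_mouth_corners", "open_mouth"},
    "anger": {"furrowed_brows", "tightened_eyelids", "tense_mouth"},
    "disgust": {"raised_lower_lip"},
}


def score_emotions(au_intensity):
    """Average the intensity (0 to 1) of each emotion's prototype action units."""
    return {
        emotion: sum(au_intensity.get(au, 0.0) for au in units) / len(units)
        for emotion, units in PROTOTYPES.items()
    }


def classify(au_intensity, secondary_threshold=0.2):
    """Pick the dominant emotion first, then look for a weaker secondary one."""
    scores = score_emotions(au_intensity)
    ranked = sorted(scores, key=scores.get, reverse=True)
    dominant = ranked[0]
    runner_up = ranked[1]
    secondary = runner_up if scores[runner_up] >= secondary_threshold else None
    return dominant, secondary


# A face showing strong anger cues plus a slight raising of the lower lip.
observed = {
    "furrowed_brows": 0.9,
    "tightened_eyelids": 0.8,
    "tense_mouth": 0.7,
    "raised_lower_lip": 0.3,
}
print(classify(observed))
```

In this toy setup, a system that stopped at the strongest signal would report only anger; keeping the runner-up score is what lets the faint lower-lip movement register as an underlying second emotion.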

"In real-world scenarios, the emotion of hatred is often formed by the combination of multiple basic emotions such as anger and disgust," she said. "Most systems on the market, upon detecting signals such as frowning or tightened eyelids, usually directly classify them as anger.

"In contrast, our algorithm can not only identify strong components of anger but also capture subtle facial movements such as a slight raising of the lower lip, thereby perceiving the underlying disgust. This enables a deeper understanding of human emotional states," Yu added.

According to data from the Gaogong Industry Research Institute, sales of China-made humanoid robots were expected to reach 18,000 units in 2025, a surge of more than 650 percent compared with the previous year. Domestic shipments are projected to climb to 62,500 units in 2026.

Natural smiles

The breakthrough extends beyond digital recognition into physical mimicry. Traditional robots are often limited to simple "open-and-close" mouth movements, leading to an unnatural "segmented" appearance.

The robots developed by Yu's team are capable of generating a wide range of both basic and compound facial expressions. The robot's face has 20 movable points, which can be freely combined into different movements according to its mechanical structure. This is achieved through precise control over all key facial components, including the eyes, eyelids, nose, mouth and neck.

"For example, the raised eyebrows and curled lip involved in a 'disgustedly surprised' emotion can be reproduced with high fidelity," said Zhao Huijuan, a doctoral student and member of the research team.

Through a specialized mechanical transmission system, the robot can move its lips in three dimensions: forward and backward, left and right, and in circular motions. This allows the machine to reproduce 46 phonemes and nearly 20 distinct mouth shapes, including those for consonant sounds like "b" and "p" that require complex airflow obstruction.

The team has also upgraded the linkage mechanisms for the nasal alae, cheeks and malar regions. Using precise mechanical transmission, these areas can produce subtle and specific expressions such as laughing and crying, overcoming the unnatural "segmented movement" often seen in traditional robot faces.

Real-time voice and visual signals are fed into an emotion recognition model, which not only determines the emotional category that should be expressed, but more importantly, calculates the intensity of each facial action unit's movements under that emotion.
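As a rough illustration of that last step, the Python sketch below turns an emotion category and an overall strength into per-action-unit intensities. The `TEMPLATES` weights, the `plan_expression` function and the 0-to-1 intensity scale are hypothetical; the article does not describe how the team's model actually computes these values.

```python
from dataclasses import dataclass


@dataclass
class ExpressionCommand:
    emotion: str
    au_intensity: dict  # action-unit name -> intensity between 0 and 1


# Hypothetical templates: the relative weight of each action unit per emotion.
TEMPLATES = {
    "happiness": {
        "raised_cheeks": 1.0,
        "upturned_mouth_corners": 1.0,
        "open_mouth": 0.5,
    },
    "anger": {
        "furrowed_brows": 1.0,
        "tightened_eyelids": 0.8,
        "tense_mouth": 0.6,
    },
}


def plan_expression(emotion, strength):
    """Scale an emotion's template by an overall strength clamped to [0, 1]."""
    strength = min(max(strength, 0.0), 1.0)
    template = TEMPLATES[emotion]
    intensities = {au: round(w * strength, 3) for au, w in template.items()}
    return ExpressionCommand(emotion, intensities)


cmd = plan_expression("happiness", 0.6)
print(cmd.au_intensity)
```

Separating the category from the per-unit intensities means the same emotion can be shown as a faint smile or a broad one, rather than as a single fixed pose.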

Yu said that truly natural expression linkage is not simply about triggering expressions, but about coordinating language understanding, emotion judgment and expression generation.

A robot must understand not only what is being said, but also the context, the rhythm of the conversation and the speaker's intent, in order to decide when to nod, when to remain neutral and when to make subtle emotional changes, she added.

Practical application

The team's "key technologies and applications of facial action perception and emotion understanding for complex human-robot interaction" took home second prize in the technological invention award category in a competition in Hubei province in early January.

The technology is already moving from the lab to the field. A pilot project led by the Central Committee of the Communist Youth League of China has deployed this digital interaction system in dozens of schools across China. The system acts as a psychological consultant, "listening" to student complaints and adjusting its feedback based on their facial expressions.

In residential communities, the robots are being tested as companions for seniors living alone. By providing "natural, credible and comfortable" emotional interactions, the machines offer support in scenarios where family members cannot be present.

The team expects the technology to expand into shopping malls, banks and the metaverse in the near future.

Yu cautioned, however, that understanding emotion does not mean the robot itself has emotions, adding that the technology can provide care and support functions, but it should never replace human social exchanges.

Contact the writers at chenmeiling@chinadaily.com.cn